AI Subscription vs H100

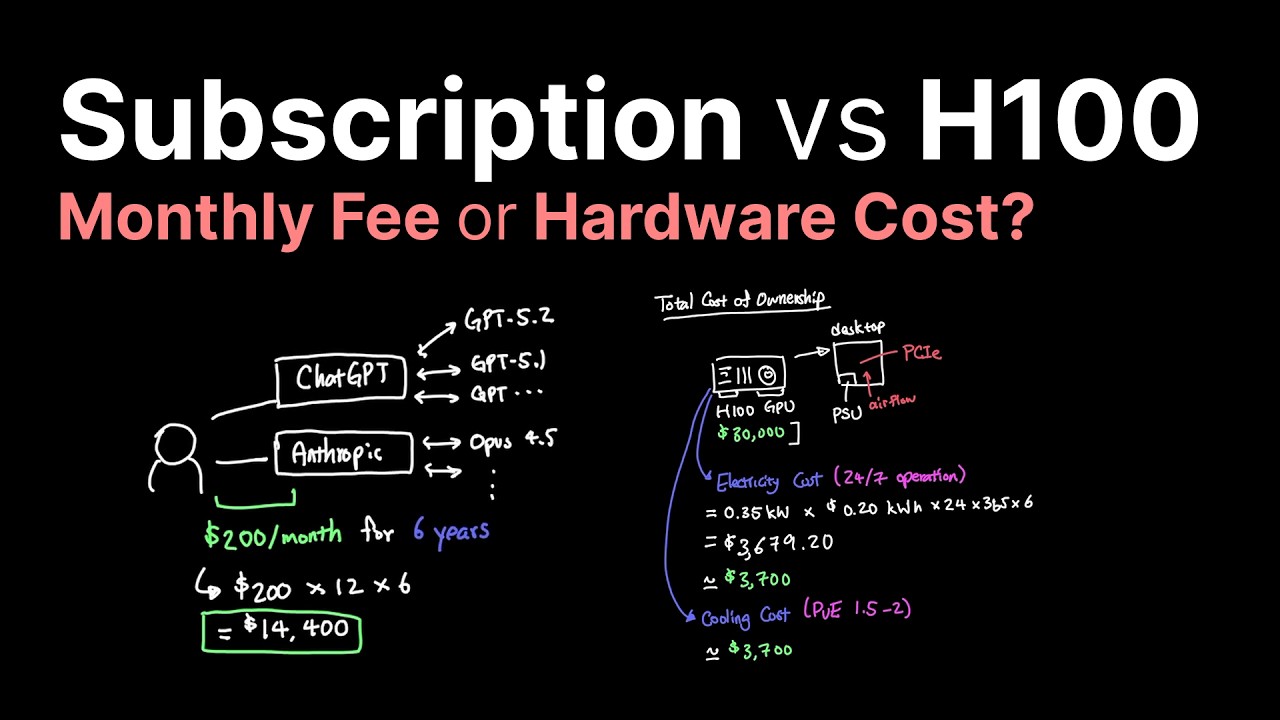

Subscription and API costs are adding up. With the cost of intelligence falling and GPUs improving, at what point should we pool our money today and buy NVIDIA hardware up front? Looking at the different pricing models in AI and the different model architectures we are seeing in the industry, let's weigh our options at this pricing tier for heavy Claude Code users.

#ai #llm #artificialintelligence #nvidia #graphicscard

Zo Computer: https://zo.computer

Chapters

- 00:00 Intro

- 00:50 Pricing Model

- 02:00 Buy H100

- 02:38 TCO

- 04:04 LLM Architecture

- 05:14 Buy DGX H100

- 05:58 Sponsor: Zo Computer

- 07:02 Inference

- 08:30 API Providers

- 09:52 Conclusion
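The break-even question behind the Pricing Model, Buy H100, and TCO chapters comes down to simple arithmetic. Below is a minimal Python sketch, not the video's own model: the $30,000 card price and $200/month subscription come from the description, while the 700 W board power (an SXM-class H100; PCIe cards draw less), the cooling overhead expressed as a PUE of 1.5, and the $0.15/kWh electricity rate are assumptions chosen for illustration. It also assumes one card can fully replace the subscriptions, which the video questions once it looks at model size.

```python
import math
from typing import Optional

# Figures from the description: card price and top-tier subscription price.
H100_PRICE_USD = 30_000.0
SUBSCRIPTION_USD_PER_MONTH = 200.0

# Assumptions for illustration only (not from the video).
GPU_POWER_KW = 0.7             # ~700 W board power, SXM-class H100
PUE = 1.5                      # cooling overhead as power usage effectiveness
ELECTRICITY_USD_PER_KWH = 0.15
HOURS_PER_MONTH = 730.0        # 24 h * 365 d / 12, card running continuously


def monthly_power_cost(power_kw: float, pue: float, usd_per_kwh: float,
                       hours: float = HOURS_PER_MONTH) -> float:
    """Monthly electricity for the card, scaled by PUE to include cooling."""
    return power_kw * pue * hours * usd_per_kwh


def break_even_months(hardware_usd: float, power_usd_per_month: float,
                      sub_usd_per_month: float, users: int = 1) -> Optional[int]:
    """Months until owning the card costs no more than `users` subscriptions
    combined, or None if running costs exceed the subscriptions it replaces."""
    monthly_savings = users * sub_usd_per_month - power_usd_per_month
    if monthly_savings <= 0:
        return None
    return math.ceil(hardware_usd / monthly_savings)


if __name__ == "__main__":
    power = monthly_power_cost(GPU_POWER_KW, PUE, ELECTRICITY_USD_PER_KWH)
    print(f"running cost: ${power:.2f}/month")
    for users in (1, 4):
        months = break_even_months(H100_PRICE_USD, power, SUBSCRIPTION_USD_PER_MONTH, users)
        print(f"{users} user(s): break even after {months} months")
```

With these assumed inputs the card costs about $115 a month to run, so a single user would need 353 months (roughly 29 years) to break even, while four friends pooling their subscription money would break even after 44 months. Resale value, depreciation, and throughput differences are left out on purpose.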

Video Chapters

- 0:12 Running AI on your own H100 vs. Cloud GPUs

- 1:17 Neocloud renting costs vs. simple subscriptions

- 2:38 The true cost of ownership: Power and cooling

- 3:55 Can a single H100 actually run a SOTA model?

- 5:14 What it costs to run Kimi K2 Thinking locally

- 7:03 Analyzing VRAM limitations for massive models

- 8:30 Why frontier labs don't lose money on cheap AI

Timestamps by StampBot 🤖 (452-ai-subscription-vs-h100)
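The 8:30 chapter on why frontier labs don't lose money on cheap AI is largely about amortization: a GPU rented by the hour gets cheaper per token the more tokens it produces each hour, and batching many users' requests together raises that total. This Python sketch uses hypothetical numbers (a $2.50/hour GPU and 50 tokens/s per request stream) and a deliberately crude linear batching model with a flat efficiency factor; real throughput depends on the model, hardware, and serving stack.

```python
TOKENS_PER_MILLION = 1_000_000
SECONDS_PER_HOUR = 3600


def cost_per_million_tokens(gpu_usd_per_hour: float, tokens_per_second: float) -> float:
    """USD per 1M generated tokens for a GPU kept busy for the whole hour."""
    tokens_per_hour = tokens_per_second * SECONDS_PER_HOUR
    return gpu_usd_per_hour / tokens_per_hour * TOKENS_PER_MILLION


def batched_throughput(batch_size: int, per_stream_tps: float,
                       efficiency: float = 1.0) -> float:
    """Hypothetical linear model of aggregate tokens/s when serving `batch_size`
    requests at once; `efficiency` in (0, 1] crudely models per-stream slowdown."""
    if batch_size < 1 or not 0 < efficiency <= 1:
        raise ValueError("batch_size must be >= 1 and efficiency in (0, 1]")
    return batch_size * per_stream_tps * efficiency


if __name__ == "__main__":
    GPU_USD_PER_HOUR = 2.50   # hypothetical rental price
    PER_STREAM_TPS = 50.0     # hypothetical decode speed for a single request
    for batch in (1, 8, 64):
        eff = 1.0 if batch == 1 else 0.8   # hypothetical batching efficiency
        tps = batched_throughput(batch, PER_STREAM_TPS, eff)
        cost = cost_per_million_tokens(GPU_USD_PER_HOUR, tps)
        print(f"batch={batch:>3}  ${cost:.2f} per 1M tokens")
```

Under these made-up inputs the cost falls from about $13.89 per million tokens at batch size 1 to about $0.27 at batch size 64, which is the intuition behind providers charging a few dollars per million tokens while still covering their GPU bill.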

Detailed Timestamps

- 0:00 AI is so cheap now: less than $5 per million tokens

- 0:06 Subscription costs for state-of-the-art LLMs, up to $200 a month

- 0:12 What if you ran AI on your own H100 GPU or rented cloud GPUs?

- 0:22 Examining the total cost of ownership for running LLMs

- 0:50 Subscription costs over 6 years vs. buying a $30,000 H100 GPU

- 1:17 Renting an H100 from Neoclouds costs more than a subscription

- 1:50 How do frontier labs actually make money with their subscription model?

- 2:00 Pooling money with friends to buy an H100 GPU for personal use

- 2:38 Calculating the total cost of ownership for an H100, including electricity and cooling

- 3:55 Can one H100 GPU actually fit a state-of-the-art open model?

- 4:45 You need at least 14 H100 GPUs to fit a 1T-parameter model

- 5:14 Buying an NVIDIA DGX H100 for $400,000 to run Kimi K2 Thinking

- 5:58 Zo Computer: your private cloud computer for data, AI, and coding

- 7:03 The math on Kimi K2 Thinking's model size and VRAM limitations

- 8:30 Why API providers and frontier labs don't lose money on cheap AI

- 9:38 Data parallelism, tensor parallelism, model parallelism, and expert parallelism make it work
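The VRAM questions at 3:55, 4:45, and 7:03 reduce to parameters-times-precision arithmetic. This Python sketch estimates how many 80 GB H100s are needed to hold a model's weights, with a flat overhead fraction standing in for KV cache, activations, and runtime buffers. The 10% default and the precisions in the usage loop are assumptions, the sketch assumes the weights shard perfectly across GPUs (which the parallelism strategies at 9:38 only approximate), and Kimi K2 Thinking's actual parameter count and precision should be checked against its model card.

```python
import math

H100_MEMORY_GB = 80.0   # per-GPU memory of an 80 GB H100
DGX_H100_GPUS = 8       # a DGX H100 system holds 8 H100 GPUs (640 GB total)


def gpus_needed(params_billions: float, bytes_per_param: float,
                overhead_fraction: float = 0.10,
                gpu_memory_gb: float = H100_MEMORY_GB) -> int:
    """Minimum number of GPUs whose combined memory fits the weights plus a flat
    overhead, assuming the model shards perfectly across them."""
    weights_gb = params_billions * bytes_per_param  # 1B params at 1 byte each = 1 GB
    total_gb = weights_gb * (1.0 + overhead_fraction)
    return math.ceil(total_gb / gpu_memory_gb)


if __name__ == "__main__":
    # Hypothetical 1T-parameter (1000B) model at common precisions.
    for label, nbytes in (("FP16/BF16", 2.0), ("FP8", 1.0), ("INT4", 0.5)):
        n = gpus_needed(1000, nbytes)
        fits = "fits" if n <= DGX_H100_GPUS else "does not fit"
        print(f"{label:>9}: {n:>2} x H100 80GB -> {fits} on one DGX H100")
```

With FP8 weights (1 byte per parameter) and the assumed 10% overhead, this lands on 14 GPUs, the same figure quoted at 4:45, although the video's own assumptions may differ. At 4-bit precision the same model would need 7 GPUs and could fit on a single 8-GPU DGX H100, before accounting for long-context KV cache.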