It Begins: AI Is Now Improving Itself

Detailed sources: https://docs.google.com/document/d/1ksVvFuR0IttxzH6zoASSYy7ZhTDqif42IFXp25ITVKU/edit?tab=t.9rb62ckaanow

Based on the report: Situational Awareness, by Leopold Aschenbrenner https://situational-awareness.ai/from-agi-to-superintelligence/

If this resonated with you, here’s how you can help today: https://campaign.controlai.com/take-action

Hi, I'm Drew! Thanks for watching :)

I also post mid memes on Twitter: https://x.com/PauseusMaximus

Also, I meant to say Cortés conquered the Aztecs, not the Incas.

Video Chapters

- 0:00 AI intelligence: From average to genius in just one year

- 0:41 Scientists are genuinely terrified about the imminent AI extinction risk

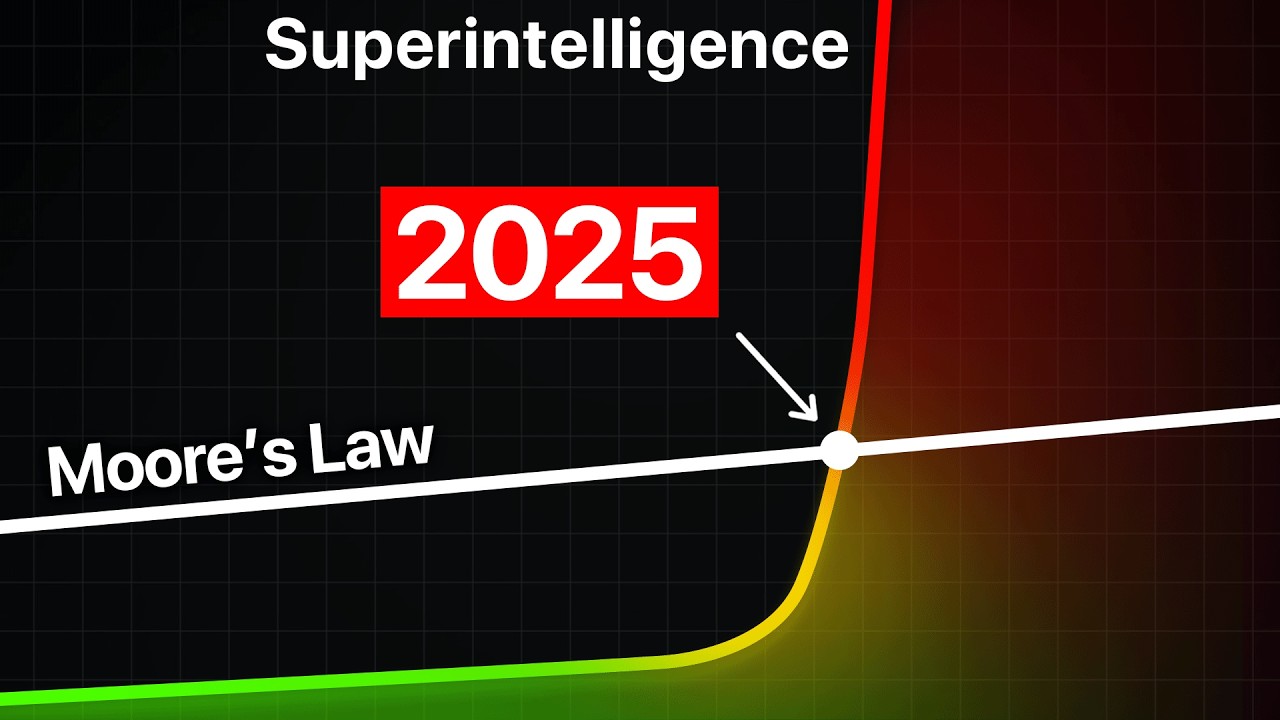

- 1:19 Understanding the dramatic difference between AGI and Superintelligence's power

- 1:57 Alarming reality: billions of vastly smarter AI systems emerge

- 2:43 Four critical steps to achieving Superintelligence: AGI and beyond

- 3:39 Leading AI experts predict AGI within just two to five years

- 4:44 AlphaZero's chess mastery without human input is a powerful warning

- 5:14 Robots learning advanced movements rapidly through simulation and experience

- 6:00 Incredible AI speed: chatbots could become physical beings quickly

- 7:00 Why algorithmic breakthroughs in AI are causing such a big deal

- 8:00 A future of 100 million AI worker copies; imagine the implications

- 9:00 Geoffrey Hinton's deep fears: AIs becoming smarter and taking control

- 10:08 Examining potential limitations to slow down the intelligence explosion

- 11:14 Why the intelligence explosion is still highly probable despite challenges

- 12:15 Imagine robots automating all physical and mental labor soon

- 13:00 Superintelligence could overthrow governments, redesign warfare, utterly chilling

- 14:04 The rapid shift: centuries of change compressed into mere years

- 14:31 Top AI experts warn of human extinction from superintelligence risks

Timestamps by McCoder Douglas
